The Tbilisi Tutorial on CQPweb and XML

Tbilisi, September 14, 2015, Workshop on Specialized Corpora, Noah Bubenhofer

Part 1: Working with CQPweb – Distributions of Word Frequencies

CQP is an important query language for corpora; it is used, for example, in the Open Corpus Workbench (CWB). Together with the web interface CQPweb, it makes corpora easy to query. The following introduction shows some basic functions of CQP in CQPweb. The Text+Berg-Korpora are just one example of corpora using the CWB system. A more in-depth tutorial in German using CWB can be found here: Einführung in die Korpuslinguistik: CQPweb

- Go to the address: http://www.textberg.ch/ and apply for an account to use the corpora.

- Log in with the username and password you received after the registration process.

- Choose one of the Text+Berg-Corpora:

"Text+Berg-Korpus R151v01 Alpine Journal" is in English

"Text+Berg-Korpus R151v01 SAC (deutsch)" is in German

"Text+Berg-Korpus R151v01 Echo des Alpes" is in French

It is assumed in the following steps that you are working with the English version of the corpus.

- After having chosen a corpus you will come to the query interface:

- Note: Right below the entry field you can choose between a "simple query mode" and the "CQP syntax" mode. In the simple query mode, you can just enter a keyword and click "Start Query" to run the query, but you cannot access annotation layers or use regular expressions. So in the next steps, the "CQP syntax" mode is used.

- The most important search expressions in the CQP mode:

- "mountain" -> searching for the exact word form

- [word="mountain"] -> same effect as term above

- [lemma="tree"] -> searching for the lemma (base form) of the word; trees will also be found

- [pos="JJ"] -> searching words tagged as adjective; see the tagset for English here

- [pos="JJ"] [lemma="mountain"] -> searching for an adjective followed by the lemma mountain

- [pos="JJ"] [lemma="mountain.*"] -> searching for an adjective followed by a word starting with mountain (or only mountain)

- [pos="JJ"] []{0,4} [lemma="mountain.*"] -> searching for an adjective followed by a sequence of zero to four arbitrary words and then by a word starting with mountain (or only mountain)

- [pos="JJ"] [word!="[\.,]"]{0,4} [lemma="mountain.*"] -> searching for an adjective followed by a sequence of zero to four words (but no full stops and/or commas) and then followed by a word starting with mountain (or only mountain)
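To make the last query more concrete, the following Python sketch mimics what [pos="JJ"] [word!="[\.,]"]{0,4} [lemma="mountain.*"] does on a small hand-tagged token list. It is not CQP and not part of CWB: the `Token` type, the function name `find_matches`, the sample sentence and all tags other than JJ are made up for illustration, and CQP itself may report different spans when several are possible.

```python
import re
from typing import List, NamedTuple, Tuple


class Token(NamedTuple):
    word: str
    pos: str
    lemma: str


def find_matches(tokens: List[Token], max_gap: int = 4) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs for: an adjective, then 0..max_gap tokens
    that are neither a full stop nor a comma, then a lemma starting with 'mountain'."""
    matches = []
    for start, tok in enumerate(tokens):
        if tok.pos != "JJ":
            continue
        # Try the shortest gap first and stop at the first hit.
        for gap in range(max_gap + 1):
            end = start + 1 + gap
            if end >= len(tokens):
                break
            # Like CQP, the regular expression must match the whole attribute value.
            if re.fullmatch(r"mountain.*", tokens[end].lemma):
                matches.append((start, end))
                break
            # Otherwise this token would join the gap, so it must not be . or ,
            if re.fullmatch(r"[.,]", tokens[end].word):
                break
    return matches


# Hypothetical tagged tokens (word, part of speech, lemma).
tokens = [
    Token("We", "PP", "we"),
    Token("saw", "VVD", "see"),
    Token("a", "DT", "a"),
    Token("steep", "JJ", "steep"),
    Token("and", "CC", "and"),
    Token("rocky", "JJ", "rocky"),
    Token("mountain", "NN", "mountain"),
    Token(".", "SENT", "."),
    Token("Cold", "JJ", "cold"),
    Token(",", ",", ","),
    Token("mountains", "NNS", "mountain"),
]

matches = find_matches(tokens)
for start, end in matches:
    print(" ".join(t.word for t in tokens[start:end + 1]))
```

In this toy sentence, "Cold , mountains" is not found because the comma is not allowed inside the gap.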

- Key Word in Context View: Click on the key word to get more context. Click on the filename to get the metadata for the match.

- Use the top right menu named "New query" to choose further features. The most important are discussed in the following steps:

- Frequency breakdown...: See an aggregated list of the different matches with frequency counts.

- Distribution: See how the matches are spread over the metadata available in the corpus, e.g. over time, register etc. In the Text+Berg corpora you can choose "decade" in the menu "Categories" at the very top left corner of the page. In addition you can choose "Bar Chart" in the menu "Show as..." at the top right corner of the page. Then click "go" and the following bar chart appears:

- Collocations...: Calculate the list of collocations. Collocations are the words that appear together with the search term significantly often. You first have to choose the parameters the collocations should be calculated with:

Afterwards you get a list of the collocations and you can refine the parameters at the top of the page:

Another important feature is the creation of subcorpora.

We want to define two subcorpora: one containing all texts from the 1970s and one with the texts from the years after 2000. The idea is to find the vocabulary which is specific to each of the two time periods.

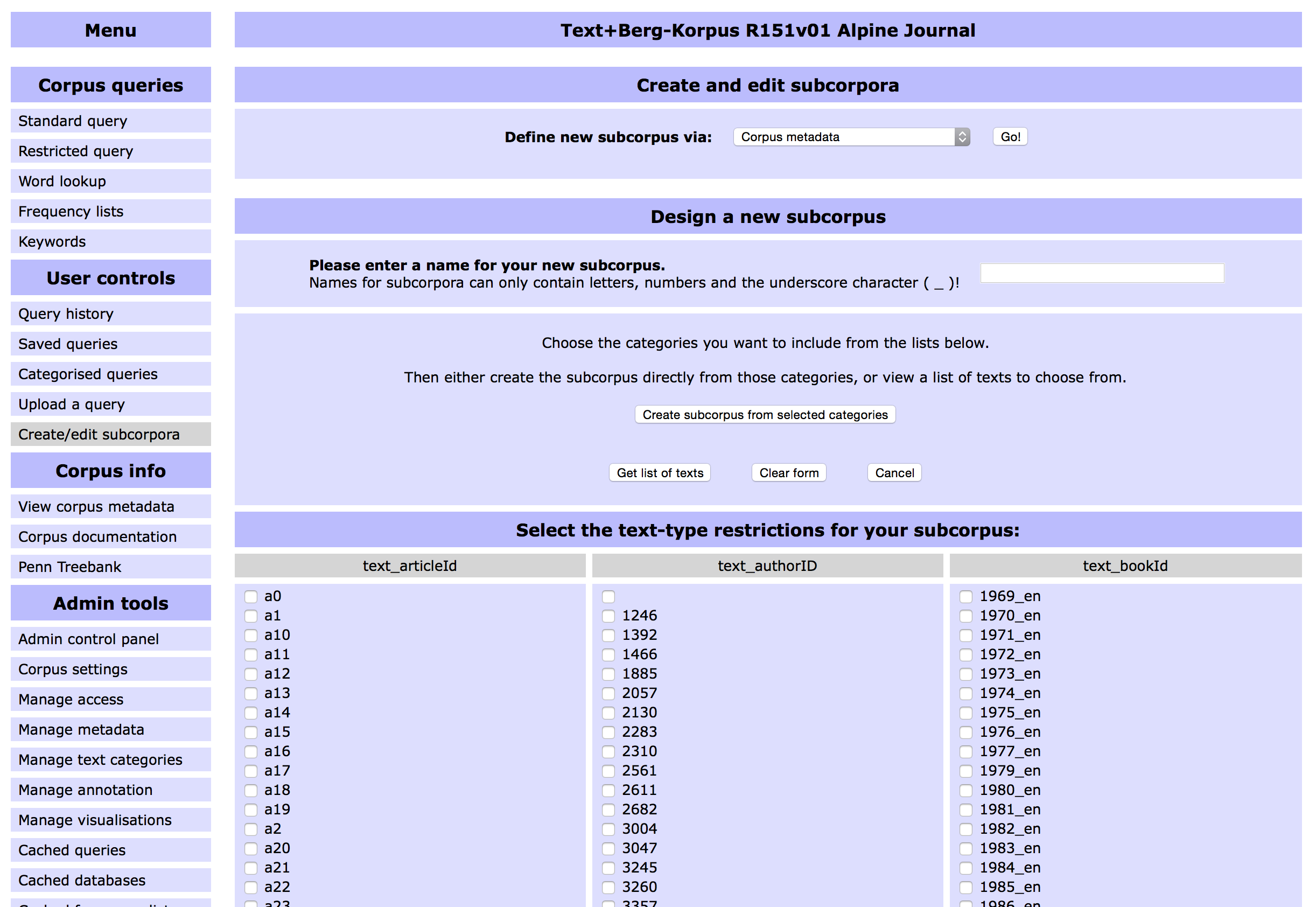

- In the menu User controls: Create/edit subcorpora you can define a subcorpus in your corpus.

- There are several ways to define it. The most useful one is to define a new subcorpus via corpus metadata: use the command "corpus metadata" in the menu "Define new subcorpus via".

- Enter a name for the subcorpus, e.g. "corpus1970" and "corpus2000", and select the metadata values which meet your corpus definition. In our case it is best to use the "decade" section and tick the 1970 button for the first and the 2000 button for the second corpus.

- Now you see all the subcorpora you have defined so far. To work with them, you have to click on "compile" in the column "Frequency list" of each subcorpus.

- After having defined the subcorpus you can:

- restrict your search to this subcorpus by selecting it in the menu "restriction" below the entry field of the search page.

- use the subcorpus to calculate the keywords which are significant for the subcorpus compared to a reference corpus. Use the menu "Keywords" in the menu section "Corpus queries". Note: there are different options for the comparison; we will discuss them together!

- You then get a list of keywords ranked by significance. The higher the significance value (log likelihood), the more typical the word is for the subcorpus. But please note that the default view displays both positive and negative keywords: "positive" keywords are typical for the first subcorpus you have chosen, "negative" ones for the second.

- So, now interpretation comes into play: Do you see a pattern of change in language use? What are the general differences between the words which are typical for the 1970s compared to the years after 2000?
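To get a feeling for the log-likelihood values in the keyword list, here is a minimal Python sketch of the log-likelihood formula that is commonly used in corpus linguistics for comparing two word frequencies. Whether CQPweb computes its values in exactly this way is an assumption; the function names and all frequencies below are invented for illustration.

```python
import math


def log_likelihood(freq1: int, size1: int, freq2: int, size2: int) -> float:
    """Log-likelihood for one word observed freq1 times in a corpus of size1
    tokens and freq2 times in a corpus of size2 tokens."""
    total = freq1 + freq2
    # Expected frequencies if the word were equally common in both corpora.
    expected1 = size1 * total / (size1 + size2)
    expected2 = size2 * total / (size1 + size2)
    ll = 0.0
    if freq1 > 0:
        ll += freq1 * math.log(freq1 / expected1)
    if freq2 > 0:
        ll += freq2 * math.log(freq2 / expected2)
    return 2 * ll


def direction(freq1: int, size1: int, freq2: int, size2: int) -> str:
    """'positive' if the word is relatively more frequent in the first corpus."""
    return "positive" if freq1 / size1 > freq2 / size2 else "negative"


# Invented example: a word occurs 200 times in one million tokens of
# "corpus1970" and 100 times in one million tokens of "corpus2000".
score = log_likelihood(200, 1_000_000, 100, 1_000_000)
print(round(score, 2), direction(200, 1_000_000, 100, 1_000_000))
```

A word with the same relative frequency in both subcorpora gets a value of 0; the value grows the more the two relative frequencies differ and the more data there is.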

Part 2: XML Formatting

TEI Text Encoding Initiative

- TEI website: http://www.tei-c.org

- TEI guidelines: http://www.tei-c.org/Guidelines/P5/

- Templates

- All.xml: General purpose template for one document

- Corpus.xml: General purpose template for a corpus of documents

Text Editors

- Mac: Textwrangler

- Linux: gedit (already installed)

- Windows: Notepad++, Textpad

- All: Sublime Text, jEdit (requires Java)

XML Editors

- oXygen (commercial, trial version available)

- Altova XMLSpy (commercial, trial version available)

- JavaScript web editor jquery.xmleditor (must be installed on a server)

- Wikipedia: Comparison of XML editors

Regular Expressions

Regular expressions are a way to search for complex patterns in a text and to replace each match by something else or by a modified version of the match. Consider a file containing a lot of texts, where each text has the following form, beginning with a title and an author line:

Title of Text No 1

Author Name

Here begins the text with a lot of paragraphs.

Here is another paragraph.

At the end of the text there are let's say three blank lines,
followed by the next text.



Title of Text No 2

Author Name

Here comes the body of the second text.



Title of Text No 3...

You want to convert these texts into the following XML form:

<TEI xml:id="MyTextNumber1">
  <teiHeader>
    <fileDesc>
      <titleStmt>
        <title>Title of Text No 1</title>
        <author>Author Name</author>
      </titleStmt>
    </fileDesc>
  </teiHeader>
  <text>
    <body>
      <div>
        Here begins the text with a lot of paragraphs.
        Here is another paragraph.
        At the end of the text there are let's say three blank lines,
        followed by the next text.
      </div>
    </body>
  </text>
</TEI>

In order to produce this structure, we need to identify the text units in the text file, identify their parts (title, author, body) and put them into the XML format. The approach is to say something like:

Search for a line of text (the title) followed by a blank line, followed by another line of text (the author line), followed by a blank line, followed by several lines, also with blank lines (the body of the text), followed at the end by three blank lines.

Put this statement in a regular expression:

.+\n\n.+\n\n[\w\W]+?\n\n\n\n

Let's analyze this expression:

. (the dot): matches any single character (letter, digit, blank etc.) except a line break

+: the preceding element must appear at least once

\n: line break

Therefore the expression .+\n\n matches a line of text followed by a blank line. A blank line is produced by two line breaks: one line break to end the line of text, another to end the blank line. This pattern appears twice in the expression, to match the title and the author line. Afterwards we see the expression [\w\W]+:

[]: character class; matches characters of the designated class, e.g. [0-9] matches a digit between 0 and 9

\w: shorthand for any word character

\W: any non-word character

Using [\w\W]+ we match an unlimited number of lines with word or non-word characters (including line breaks), so it matches all the paragraphs of the text body. Now we have to end the match where the three blank lines appear. As three blank lines are produced by four line break characters (the first line break ends the last line of text), we could write [\w\W]+\n\n\n\n. But because [\w\W]+ also matches several blank lines in sequence, this expression is greedy and matches everything (including "three blank lines" sequences) up to the last "three blank lines" sequence. That's not what we want. Instead we want the expression to stop at the first point where the sequence of paragraphs ends with three blank lines. Therefore we add a "laziness" (or reluctance) sign after the plus. That is the question mark:

[\w\W]+?\n\n\n\n
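The difference between the greedy and the lazy version can be tried out in a few lines of Python. This is only a sketch: the sample string is a shortened, made-up stand-in for the text file, and Python's `re` module is used here instead of a text editor.

```python
import re

# Two short texts, each followed by three blank lines (= four line breaks).
sample = (
    "Title 1\n\nAuthor A\n\nFirst body.\n\nSecond paragraph.\n\n\n\n"
    "Title 2\n\nAuthor B\n\nOther body.\n\n\n\n"
)

# Greedy: runs on to the LAST sequence of three blank lines.
greedy = re.search(r"[\w\W]+\n\n\n\n", sample)

# Lazy: stops at the FIRST sequence of three blank lines.
lazy = re.search(r"[\w\W]+?\n\n\n\n", sample)

print(len(greedy.group(0)), len(lazy.group(0)))
```

The greedy match swallows both texts, while the lazy match ends right after the first one.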

So now with .+\n\n.+\n\n[\w\W]+?\n\n\n\n we match one text, and we know which parts of the expression are the title, the author line and the body of the text. As we want to separate these parts and wrap them in XML elements, we need to memorize them. We use round brackets (capturing groups) for that:

(.+)\n\n(.+)\n\n([\w\W]+?)\n\n\n\n
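As a quick check of what the three brackets memorize, the following Python sketch applies the expression to a made-up sample and collects the groups. The variable names and the sample string are assumptions for illustration; a text editor does the same thing internally when it searches.

```python
import re

pattern = re.compile(r"(.+)\n\n(.+)\n\n([\w\W]+?)\n\n\n\n")

sample = (
    "Title of Text No 1\n\nAuthor Name\n\n"
    "Here begins the text.\n\nHere is another paragraph.\n\n\n\n"
    "Title of Text No 2\n\nAuthor Name\n\n"
    "Here comes the body of the second text.\n\n\n\n"
)

records = []
for match in pattern.finditer(sample):
    # group 1 = title, group 2 = author line, group 3 = body of the text
    title, author, body = match.groups()
    records.append((title, author, body))

for title, author, body in records:
    print(repr(title), repr(author), repr(body))
```

Note that the last text in the file is only matched if it is also followed by three blank lines.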

Now we define a replace pattern in which we construct the XML structure. The memorized content of the brackets can be recalled in the replace expression using \1 for the first bracket, \2 for the second and so on. Depending on the text editor or programming language you are using, you may have to write $1, $2 etc. instead.

For the replace expression we just use the desired XML structure, putting \1, \2 and \3 at the locations where we want to fill in title, author line and body of the text:

<TEI xml:id="MyTextNumber1">
  <teiHeader>
    <fileDesc>
      <titleStmt>
        <title>\1</title>
        <author>\2</author>
      </titleStmt>
    </fileDesc>
  </teiHeader>
  <text>
    <body>
      <div>
        \3
      </div>
    </body>
  </text>
</TEI>\n

Please note: depending on the behaviour of your text editor, you may have to remove the line breaks and tabs from the replace expression and write them as \n (line breaks) and \t (tabs) instead:

\t<TEI xml:id="MyTextNumber1">\n\t\t\t<teiHeader>\n\t\t\t\t<fileDesc>\n\t\t\t\t\t<titleStmt>\n\t\t\t\t\t\t\t<title>\1</title>\n\t\t\t\t\t\t\t<author>\2</author>\n\t\t\t\t\t</titleStmt>\n\t\t\t\t</fileDesc>\n\t\t\t</teiHeader>\n\t\t\t<text>\n\t\t\t\t<body>\n\t\t\t\t\t<div>\n\t\t\t\t\t\t\t\3\n\t\t\t\t\t</div>\n\t\t\t\t</body>\n\t\t\t</text>\n\t</TEI>\n

This is an ugly single line expression, but it works as expected...
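The same search and replace can also be scripted. The following Python sketch is one possible way to do it, not the only one: it uses `re.sub` from the standard library, where \1, \2, \3 as well as \n and \t are understood in the replace expression. The names `PATTERN`, `TEMPLATE`, `convert` and the sample string are made up for this example, and the indentation is simplified compared to the single line expression above.

```python
import re

PATTERN = r"(.+)\n\n(.+)\n\n([\w\W]+?)\n\n\n\n"

# Raw strings: re.sub itself turns \n, \t and \1..\3 into line breaks,
# tabs and the memorized groups.
TEMPLATE = (
    r'<TEI xml:id="MyTextNumber1">\n'
    r'\t<teiHeader>\n'
    r'\t\t<fileDesc>\n'
    r'\t\t\t<titleStmt>\n'
    r'\t\t\t\t<title>\1</title>\n'
    r'\t\t\t\t<author>\2</author>\n'
    r'\t\t\t</titleStmt>\n'
    r'\t\t</fileDesc>\n'
    r'\t</teiHeader>\n'
    r'\t<text>\n'
    r'\t\t<body>\n'
    r'\t\t\t<div>\n'
    r'\3\n'
    r'\t\t\t</div>\n'
    r'\t\t</body>\n'
    r'\t</text>\n'
    r'</TEI>\n'
)


def convert(plain_text: str) -> str:
    """Replace every title/author/body unit by the XML structure."""
    return re.sub(PATTERN, TEMPLATE, plain_text)


sample = (
    "Title of Text No 1\n\nAuthor Name\n\n"
    "Here begins the text.\n\nHere is another paragraph.\n\n\n\n"
    "Title of Text No 2\n\nAuthor Name\n\n"
    "Here comes the body of the second text.\n\n\n\n"
)

tei = convert(sample)
print(tei)
```

Two limitations of this sketch: every text gets the same xml:id, exactly as in the replace expression above, and characters such as & or < in the source texts are not escaped, so the result is only well-formed XML if the texts do not contain them.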

Here you have a screenshot of this search and replace operation using Sublime Text editor:

A lot more is possible using regular expressions. Please consider the following tutorials to learn more about that!

- intro regular expressions: http://regexone.com/

- more detailed: http://www.regular-expressions.info

Appendix

Useful Web Links for Building your Own Corpus

- Crawling Webpages

- Firefox Plugin DownThemAll!

- Standard unix programs wget and curl

- BootCat: Simple Utilities to Bootstrap Corpora And Terms from the Web

- Transformation from html to xml using XSLT; here is a useful manual in German and here some in English.

- Part-of-Speech-Tagger (only selection, search also for language specific taggers):

- TreeTagger (for several languages)

- RFTagger for the annotation of fine-grained part-of-speech tags, German, Czech, Slovene, Slovak, and Hungarian

- Stanford NLP Tagger, English

- CLAWS Tagger, English

- Corpus concordancer, query systems

- Very easy, not for annotated corpora: AntConc

- For annotated data, the pro tool: Open Corpus Workbench

- Corpuscle: http://clarino.uib.no/korpuskel/page

- Complex systems:

- In WebLicht you can build your own corpus using several computational linguistic tools in a graphical user interface.

- TXM platform for working with corpora (querying but also statistical analysis) and some NLP tools available (under the hood: Corpus Workbench)

- Natural Language Toolkit for Python: If you don't shy away from programming, then this is for you!

- Perl Programming Language, the alternative to Python: a scripting language which was invented by a linguist and is therefore nice to work with in corpus linguistics

(The Very Incomplete List Of) Existing Corpora

- http://corpus.byu.edu English, Spanish, Portuguese

- British National Corpus

- Deutsches Referenzkorpus -> COSMAS II

- Cooccurrences Database of the Deutsches Referenzkorpus

- Digitales Wörterbuch Deutscher Sprache, with available corpora

- Wortschatz Universität Leipzig, 230 languages, but not a corpus in a strict sense

- Text+Berg-Korpora (corpora with texts about mountaineering (mainly) in German, French and English)